Improving resource allocation with algorithms

A deeper dive into Rhema Vaithianathan’s NZAE Conference 2023 keynote

Every now and then, you see a presentation that really sticks with you. For me, Rhema Vaithianathan’s keynote at this year’s NZAE conference was one of those. While I can’t recreate her compelling storytelling, I can do a deeper dive into the research behind the presentation.

In this post, I’m going to dig into the key findings of just one of the papers Prof. Vaithianathan discussed: The Impact of Algorithmic Tools on Child Protection: Evidence from a Randomized Controlled Trial. That paper, by Chris Mills and Marie-Pascale Grimon, offers a powerful argument for using algorithms to inform decision-making.

The premise: How can interventions be best allocated?

We want resources allocated to people when they benefit, or when society benefits, and we want them withheld when the allocation would harm those people or society. This holds across many domains of social policy. For example, we don't want unnecessary invasive surgery, but we definitely want surgery to happen when it is needed. In criminal justice, we want bail granted when that reduces harm, and refused when it increases harm.

Resource allocation decisions like these can be high-pressure, high-stakes, and stressful for decision-makers. These costs, together with the possibility of erroneous decisions, make it worth looking for decision-support tools. Mills and Grimon investigated whether an algorithm can help in such a situation, using a tool developed by a New Zealand team and applied to Child Protection Services in the US.

Introducing … Child Protection Services

In the US, Child Protection Services receives 4 million calls per year alleging child maltreatment. For each call, a decision must be made: screen in (investigate) or screen out (not investigate). The stakes are clearly high: failing to investigate could allow continued victimisation and harm, but unnecessary investigation could cause harm through trauma and destabilisation, as well as wasting resources.

Over the course of childhood, one in three US children are investigated for child abuse and neglect, rising to one in two for African-American children[1]. Under 20% of investigations result in substantiated maltreatment, suggesting that initial screening produces many false positives. At the same time, over 50% of first-time confirmed maltreatment victims had previously been reported but not investigated, suggesting that screening also produces many false negatives. The overall pattern points to suboptimal resource allocation, and to harm.

The decision-making process

A child maltreatment allegation is reported to a hotline worker, who flags about 5% of calls for an emergency response and passes the remaining 95% to a team for a screening decision. That team decides whether to screen each referral in or out, and makes that decision in about ten minutes. In their study, Mills and Grimon introduced an extra piece of information at this point: the output of an algorithm trained to predict the child's likelihood of being removed from the home within two years.

The tool

The algorithm was developed by Rhema Vaithianathan and colleagues at the Centre for Social Data Analytics (CSDA) at AUT. It was trained on past referrals, using historical data from the same (unspecified) area from 2010 to 2014. The model, a Lasso-regularised logistic regression, included several hundred demographic features but excluded race/ethnicity and disability status. The initial set of candidate variables was agreed with the implementing partners.
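The model type named above can be illustrated with a minimal sketch of Lasso (L1-penalised) logistic regression, fitted here by proximal gradient descent on synthetic data. Everything in it is an assumption for illustration: the toy data, the five unnamed features, the penalty strength `lam`, the step size and the iteration count. It is not the CSDA model, which would more plausibly be fitted with a standard statistical package.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def soft_threshold(w, t):
    """Proximal operator of the L1 penalty: shrink w towards zero by t."""
    if w > t:
        return w - t
    if w < -t:
        return w + t
    return 0.0


def fit_lasso_logistic(X, y, lam=0.05, step=0.5, iters=300):
    """L1-penalised logistic regression via proximal gradient descent (ISTA).

    X: list of feature rows; y: list of 0/1 labels; lam: L1 penalty strength.
    The intercept b is left unpenalised.
    """
    n, p = len(X), len(X[0])
    w, b = [0.0] * p, 0.0
    for _ in range(iters):
        # Gradient of the average log-loss with respect to w and b
        grad_w, grad_b = [0.0] * p, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            for j in range(p):
                grad_w[j] += err * xi[j] / n
            grad_b += err / n
        # Plain gradient step, then soft-threshold the weights (not the intercept)
        w = [soft_threshold(wj - step * gj, step * lam) for wj, gj in zip(w, grad_w)]
        b -= step * grad_b
    return w, b


# Synthetic data: only the first of five hypothetical features affects the outcome
random.seed(0)
X = [[random.gauss(0, 1) for _ in range(5)] for _ in range(400)]
y = [1 if random.random() < sigmoid(2.0 * row[0] - 1.0) else 0 for row in X]

w, b = fit_lasso_logistic(X, y)
print([round(wj, 3) for wj in w])  # noise weights are shrunk to or near zero
```

The L1 penalty is what lets a model start from several hundred candidate features and still rely on a smaller subset, because weak predictors are shrunk to exactly zero.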

Predictions were binned into 20 equal-sized groups to make the score easier for the team to understand and use.
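The binning step described above can be sketched as follows, assuming (hypothetically) that the cut points are the quantiles of a reference set of past predicted probabilities, and that scores run from 1 (lowest risk) to 20 (highest). The reference data here are random numbers, not real predictions.

```python
import bisect
import random
from collections import Counter


def ventile_cutoffs(reference_probs, n_bins=20):
    """Cut points that split the reference predictions into n_bins equal-sized groups."""
    ranked = sorted(reference_probs)
    n = len(ranked)
    # The k-th cut point is the value at the k/n_bins quantile (k = 1 .. n_bins - 1)
    return [ranked[(k * n) // n_bins] for k in range(1, n_bins)]


def risk_score(prob, cutoffs):
    """Map a predicted probability to a score from 1 to len(cutoffs) + 1."""
    return bisect.bisect_right(cutoffs, prob) + 1


random.seed(1)
reference = [random.random() for _ in range(1000)]  # stand-in for past predictions
cutoffs = ventile_cutoffs(reference)
counts = Counter(risk_score(p, cutoffs) for p in reference)
print(sorted(counts.items())[:3])  # → [(1, 50), (2, 50), (3, 50)]
```

New referrals would then be scored against the same fixed cut points, so a given score always means the same position in the reference distribution.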

The randomised controlled trial setup

The researchers wanted to know how the algorithm score affected decision-making. What better way to find out than the gold standard[2], a randomised controlled trial (RCT)? There are many ways to run an RCT, but all involve some form of random allocation to treatment. This reduces the bias that comes with self-selection and gives a robust control group: people who could have been treated, but weren't.

In this study, the decision to screen in applies to all children in a household, so reported households were randomised to either show or hide the risk score from the team making the screening decision. Because household and family structures change over time, randomisation was done at the level of the biological mother: all children with the same biological mother shared the same treatment status.
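The mother-level randomisation described above is a form of cluster randomisation. The sketch below assumes hypothetical `mother_id` and `child_id` fields, a 50/50 split and a fixed seed; it is not the trial's actual assignment code. The key property is that an arm is drawn once per biological mother and then reused for every child linked to her.

```python
import random


class MotherLevelRandomiser:
    """Assign trial arms per biological mother, so siblings always share an arm.

    Hypothetical sketch: field names, the 50/50 split and the seed are assumptions.
    """

    def __init__(self, seed=2020, p_treat=0.5):
        self._rng = random.Random(seed)
        self._p_treat = p_treat
        self._arm_by_mother = {}

    def arm_for_mother(self, mother_id):
        # Draw an arm the first time a mother appears; reuse it afterwards
        if mother_id not in self._arm_by_mother:
            shown = self._rng.random() < self._p_treat
            self._arm_by_mother[mother_id] = "score shown" if shown else "score hidden"
        return self._arm_by_mother[mother_id]

    def arm_for_child(self, child):
        # A child inherits the arm of their biological mother
        return self.arm_for_mother(child["mother_id"])


randomiser = MotherLevelRandomiser()
children = [
    {"child_id": "c1", "mother_id": "m1"},
    {"child_id": "c2", "mother_id": "m1"},  # sibling of c1
    {"child_id": "c3", "mother_id": "m2"},
]
arms = {c["child_id"]: randomiser.arm_for_child(c) for c in children}
print(arms)
```

Because the arm is keyed on the mother rather than the household, a child who moves household keeps the same treatment status.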

The trial ran from November 2020 to March 2022, and the analysis focused on each child's first randomisation event in that period. Overall, 4,213 referrals relating to 6,852 children (directly referred children and other children in reported households) were included.

Did the algorithm work?

The research investigated three outcomes of interest:

Does the tool reduce harm?

Does the tool affect racial bias?

Does the tool affect decision-making?

1. Does tool availability reduce harm? Yes

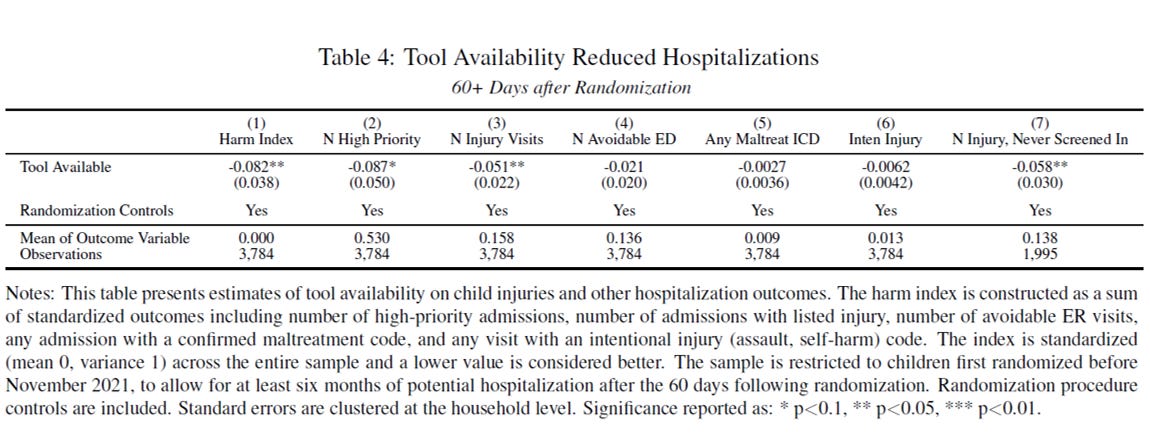

Most importantly, the researchers wanted to know whether tool availability reduced harm to children. Using hospitalisation data, they found compelling evidence that it did. Specifically, tool availability reduced the average number of future injury-related hospital admissions by 32% (a reduction of 0.051 admissions relative to a mean of 0.158).
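The 32% figure is the absolute reduction expressed relative to the mean, which is easy to check:

```python
effect = 0.051         # reduction in average future injury admissions
baseline_mean = 0.158  # mean number of future injury admissions
relative_reduction = effect / baseline_mean
print(f"{relative_reduction:.0%}")  # → 32%
```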

2. Does the tool affect racial bias? Yes

Algorithms trained on historical data could embed pre-existing human bias, or they could be less biased than human decision-makers. In this study, the researchers found that the tool reduced racial disparities among low-risk children, suggesting that low-risk Black children had previously been disproportionately screened in.
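One simple way to quantify a disparity like this is the gap in screen-in rates between Black and white children within a low-risk band, computed separately for each trial arm. In the sketch below the record fields, the definition of "low risk" (a score of 10 or below) and the toy records are all hypothetical; the paper's own measure may differ.

```python
from collections import defaultdict


def screen_in_gap(referrals, low_max=10):
    """Black minus white screen-in rate among low-risk referrals, for each trial arm.

    Hypothetical fields: 'arm', 'race', 'score' (1-20), 'screened_in' (bool).
    'Low risk' is assumed to mean score <= low_max.
    """
    counts = defaultdict(lambda: [0, 0])  # (arm, race) -> [number screened in, total]
    for r in referrals:
        if r["score"] <= low_max:
            cell = counts[(r["arm"], r["race"])]
            cell[0] += int(r["screened_in"])
            cell[1] += 1
    gaps = {}
    for arm in {arm for arm, _ in counts}:
        black_in, black_n = counts[(arm, "Black")]
        white_in, white_n = counts[(arm, "white")]
        if black_n and white_n:  # only compare arms where both groups appear
            gaps[arm] = black_in / black_n - white_in / white_n
    return gaps


def make(arm, race, score, decisions):
    """Build toy referral records; purely illustrative."""
    return [{"arm": arm, "race": race, "score": score, "screened_in": d} for d in decisions]


toy = (
    make("hidden", "Black", 3, [True, True, True, False])
    + make("hidden", "white", 3, [True, False, False, False])
    + make("shown", "Black", 3, [True, True, False, False])
    + make("shown", "white", 3, [True, True, False, False])
    + make("shown", "Black", 18, [True])  # high risk, so excluded from the gap
)
gaps = screen_in_gap(toy)
print(gaps["hidden"], gaps["shown"])  # → 0.5 0.0
```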

3. How does the tool affect decision-making? Not how you would expect

Given the impressive results on harm reduction, it would be easy to assume the tool was used in the most obvious way: higher-scoring children screened in more often, and lower-scoring children less often. But this wasn't the case. Surprisingly, the tool increased screen-in rates for referrals it identified as low-risk, and reduced screen-in rates for referrals it identified as high-risk.
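The pattern described above amounts to comparing screen-in rates between the two arms within each risk band. A minimal sketch, with made-up records and an assumed low/high cut at a score of 10 (neither comes from the paper):

```python
from collections import defaultdict


def screen_in_effect_by_band(referrals, low_max=10):
    """Screen-in rate with the score shown minus with it hidden, per risk band.

    Hypothetical fields: 'arm' ('shown' or 'hidden'), 'score' (1-20), 'screened_in' (bool).
    Scores up to low_max count as 'low' risk; higher scores count as 'high'.
    """
    tally = defaultdict(lambda: [0, 0])  # (band, arm) -> [number screened in, total]
    for r in referrals:
        band = "low" if r["score"] <= low_max else "high"
        tally[(band, r["arm"])][0] += int(r["screened_in"])
        tally[(band, r["arm"])][1] += 1
    rate = {key: s / n for key, (s, n) in tally.items()}
    return {band: rate[(band, "shown")] - rate[(band, "hidden")] for band in ("low", "high")}


# Made-up records (arm, score, screened_in), not study data
toy = [
    ("hidden", 2, False), ("hidden", 4, False), ("hidden", 5, True), ("hidden", 7, False),
    ("shown", 2, True), ("shown", 4, False), ("shown", 5, True), ("shown", 7, False),
    ("hidden", 15, True), ("hidden", 18, True), ("hidden", 19, True), ("hidden", 20, False),
    ("shown", 15, True), ("shown", 18, False), ("shown", 19, True), ("shown", 20, False),
]
effects = screen_in_effect_by_band([{"arm": a, "score": s, "screened_in": d} for a, s, d in toy])
print(effects)  # → {'low': 0.25, 'high': -0.25}
```

A positive value for the low band and a negative one for the high band is the counter-intuitive shape the study reports.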

To explore why, the researchers turned to the notes taken during the team's screening discussions. Using text analysis, they checked whether the content of those discussions changed when the tool was available. It did: with the tool, the team were more likely to use phrases such as “no allegation of abuse or neglect”, “no mark or bruise”, “physical abuse”, and “defined by law no [abuse or neglect]”, that is, phrases directly relating to the allegation.

The researchers interpreted this as suggesting that the tool prompted workers to focus on the details of the present allegation, alongside the algorithmic risk score, leading them to make better decisions than they did without the tool.
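A very simple version of this kind of text comparison is the share of notes in each arm that contain a given phrase. The sketch below uses phrases quoted above, but the notes are invented and plain substring matching is an assumption; the researchers' actual text-analysis method is likely more sophisticated.

```python
def phrase_share(notes, phrases):
    """Share of notes containing each phrase (case-insensitive substring match)."""
    lowered = [note.lower() for note in notes]
    return {p: sum(p in note for note in lowered) / len(lowered) for p in phrases}


PHRASES = ["no allegation of abuse or neglect", "no mark or bruise", "physical abuse"]

# Invented screening notes, for illustration only
notes_shown = [
    "Caller reports no mark or bruise; no allegation of abuse or neglect.",
    "Allegation of physical abuse by a caregiver.",
]
notes_hidden = [
    "Family known to the agency from prior referrals.",
    "Caller concerned about home conditions; physical abuse not alleged.",
]

shown = phrase_share(notes_shown, PHRASES)
hidden = phrase_share(notes_hidden, PHRASES)
diff = {p: shown[p] - hidden[p] for p in PHRASES}  # positive = more common with the tool
print(diff)
```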

What makes a good predictive algorithm?

So the availability of the risk score reduced harm, reduced racial disparities, and enhanced information use, albeit through mechanisms we might not have expected. But in thinking about how this applies more widely, the key lessons concern what makes a good predictive algorithm, and how it should best be evaluated. A good algorithm is:

trained on local data;

able to predict outcomes it was not trained on; and

used to inform, not make, decisions in high-stakes situations[3];

while a good evaluation should monitor a direct, harm-reducing outcome (like reduced hospitalisation) rather than tool compliance alone.
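One common way to check whether a risk score carries information about an outcome it was not trained on is the area under the ROC curve (AUC): the probability that a randomly chosen case with the outcome scores higher than one without it. The sketch below uses invented scores and a hypothetical `injury_admission` outcome; note that the paper's harm result comes from the RCT comparison, not from an AUC.

```python
def auc(scores, outcomes):
    """Probability that a random case with the outcome outscores a random case without it.

    Ties count as half a win. Quadratic in the number of cases, which is fine for a sketch.
    """
    pos = [s for s, y in zip(scores, outcomes) if y]
    neg = [s for s, y in zip(scores, outcomes) if not y]
    if not pos or not neg:
        raise ValueError("need at least one case with and one without the outcome")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Invented 1-20 risk scores and a hypothetical outcome the model was not trained on
scores = [2, 5, 9, 12, 15, 18, 19, 20]
injury_admission = [0, 0, 0, 1, 0, 1, 0, 1]
print(auc(scores, injury_admission))  # → 0.8
```

An AUC of 0.5 means the score ranks cases no better than chance; values closer to 1 mean it separates them well.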

Computers, and the algorithms they implement, are playing an increasing role in all our lives. But we need not fear all algorithms, even when they are applied to sensitive, high-stakes decisions. Mills and Grimon's robust evaluation of Rhema Vaithianathan's algorithm demonstrates that well-designed and well-implemented decision-support algorithms can allocate resources better, both improving outcomes and reducing human bias.

By Olivia Wills

[1] Kim H, Wildeman C, Jonson-Reid M, Drake B. Lifetime Prevalence of Investigating Child Maltreatment Among US Children. Am J Public Health. 2017 Feb;107(2):274-280. doi:10.2105/AJPH.2016.303545. Epub 2016 Dec 20. PMID: 27997240; PMCID: PMC5227926.

[2] Opinions differ on this. See Angus Deaton & Nancy Cartwright, Understanding and misunderstanding randomized controlled trials (2018).

[3] Arguably, the crucial point is that this is a decision-support tool, not a decision-making tool.